
Running the Phi-2 LLM on Ollama!

Large Language Models (LLMs) are fascinating, and their benefits are obvious and well discussed all over the internet. However, it’s also crucial to understand the mechanics behind these models. This knowledge is not just interesting — it’s essential for security purposes and effective application development.

I’m not pretending to understand the inner workings of LLMs, but I’m convinced that playing around with the available tooling and learning about it is a good step forward on our shared journey.

The easiest way to get started is usually with a few simple, working examples.

As I am a big fan of containers and Kubernetes, let’s look for a container-based example.

An interesting project is https://ollama.ai/. It offers a Docker image we can use, along with good instructions.

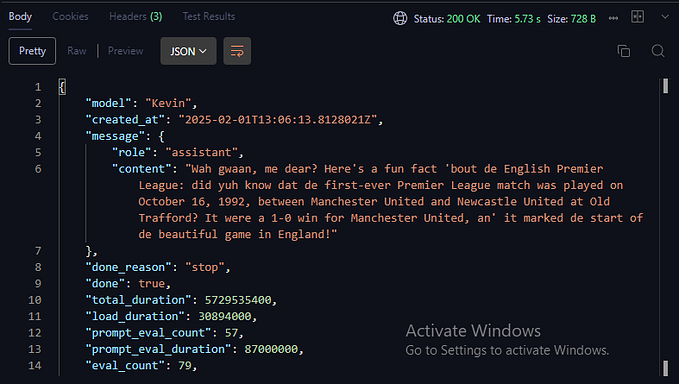

So let’s get started. Once things are running, we basically have a container that can be interacted with via an API or the CLI. We’ll use the CLI for now.
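We’ll stick to the CLI, but to show what the API side looks like, here is a minimal sketch of calling it with curl once the container is running. It assumes the server is reachable on localhost:11434, that a model tagged `phi` has already been pulled (the tag is an assumption; use whatever name you pulled), and it builds the JSON by hand, so prompts containing quotes, backslashes or newlines would need proper escaping (for example with `jq`). The function names are hypothetical; the `/api/generate` endpoint and its `model`, `prompt` and `stream` fields come from Ollama’s API documentation.

```shell
#!/usr/bin/env bash
# Minimal sketch: send one prompt to Ollama's REST API and print the raw JSON reply.
# Assumptions: server on localhost:11434 (override with OLLAMA_URL), a model tagged
# "phi" already pulled, and prompts without characters that need JSON escaping.
set -euo pipefail

OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Hypothetical helper: build the request body for POST /api/generate.
# "stream": false asks the server for one complete JSON object instead of a stream.
build_generate_payload() {
  local model="$1" prompt="$2"
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$model" "$prompt"
}

# Hypothetical helper: send the request; the generated text is in the reply's "response" field.
ollama_generate() {
  curl -sS "${OLLAMA_URL}/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(build_generate_payload "$1" "$2")"
}
```

Usage, once a model is available: `ollama_generate phi "Why is the sky blue?"`. Turning streaming off gives up the token-by-token output, but a single JSON object is easier to handle in a script.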

```shell
docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama
```

Take note that running these systems locally can require a fair amount of disk space and CPU.
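Because `docker run -d` returns immediately, the server inside the container may need a moment before it accepts requests. A minimal sketch of a readiness check, assuming the `-p 11434:11434` mapping above and that the server answers a plain GET on `/` with a non-error status once it is up; the function name `wait_for_ollama` and its defaults are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch: poll the published Ollama port until the server responds.
# Assumption: a GET on the root URL returns a non-error HTTP status once the server is up.
set -euo pipefail

# Hypothetical helper. Args: url (default http://localhost:11434/),
# max attempts (default 30), seconds between attempts (default 1).
wait_for_ollama() {
  local url="${1:-http://localhost:11434/}" attempts="${2:-30}" delay="${3:-1}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # --fail makes curl exit non-zero on HTTP status >= 400; --max-time avoids hanging.
    if curl --fail -sS --max-time 2 -o /dev/null "$url"; then
      echo "Ollama is reachable at $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "Ollama did not respond at $url after $attempts attempts" >&2
  return 1
}
```

Usage: `wait_for_ollama && echo ready`. If it keeps failing, `docker logs ollama` shows the server output from the container.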

Once we have our container up and running, we need to download and run the LLM of our choice.

docker exec -it ollama…